Vidya Balan Becomes The Latest Victim Of Misleading AI-Generated Videos, Urges Everyone To Be Cautious!

Recently, Vidya Balan took to her Instagram and addressed a fake video circulating online.

Fake videos made by AI (artificial intelligence) are becoming a massive problem, and they’re causing worry and confusion. These videos can spread quickly online, and celebrities are often targeted because their pictures and videos are easy to find. The latest victim of fake AI-generated content is actress Vidya Balan, who recently had to address a fake video circulating online. Read on to know more!

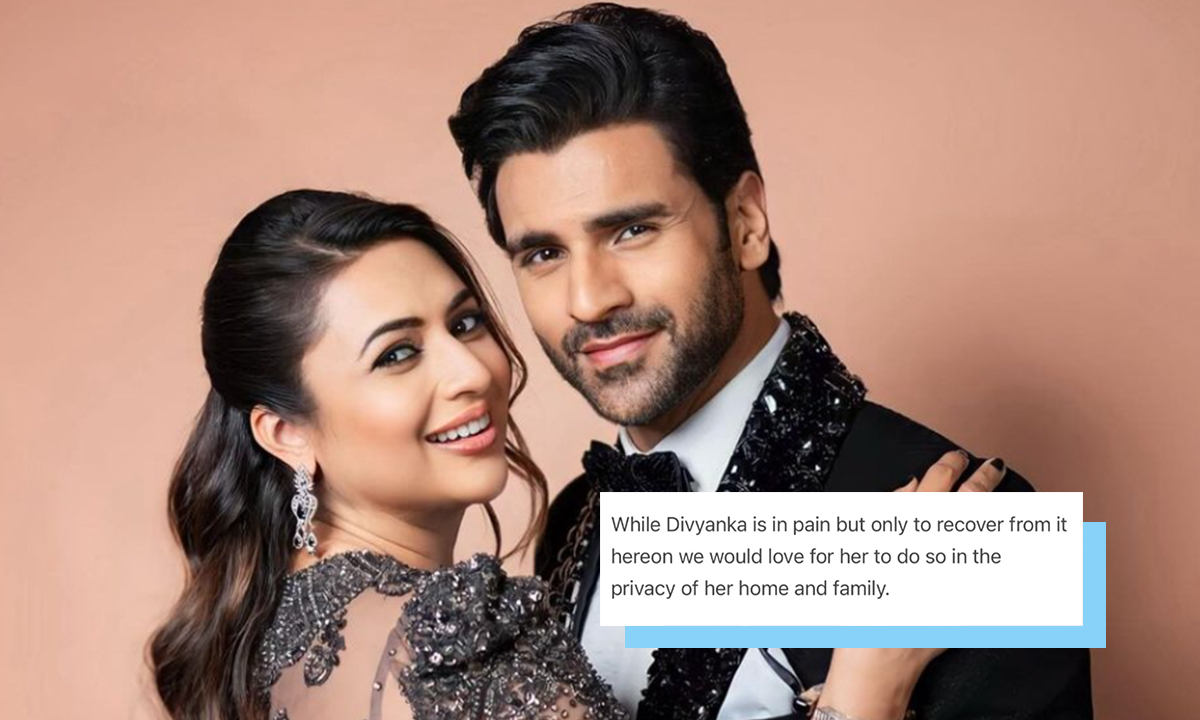

Vidya Balan On Misleading AI-Generated Videos

Vidya Balan discovered that several videos seemingly featuring her were being widely shared across social media platforms and messaging apps like WhatsApp. These videos were not genuine; they were created using artificial intelligence, a technology that can manipulate visual and audio content to produce convincing but false representations. Upon discovering the deepfake videos, Vidya Balan took to her Instagram account to alert her fans and followers.

The actress clarified that she had no involvement in the creation or distribution of these videos. She stated that the content of the videos did not reflect her views or work in any way. Vidya Balan wanted to make it clear that any claims made within the fabricated videos should not be linked to her. She strongly advised everyone to be cautious about misleading AI-generated content and urged them to verify information before sharing it. Check out the video here!


Vidya Balan’s experience is far from isolated. Alia Bhatt, Ranveer Singh, and numerous other celebrities have also dealt with the disturbing reality of deepfake videos. These incidents have sparked widespread concern, highlighting the urgent need for strict measures to tackle this growing threat. What do you think?


First Published: March 02, 2025 2:25 PM